Apple Vision Pro: From Face ID to Spatial Computing with TrueDepth Technology

Explore how Apple's Vision Pro leverages TrueDepth technology for spatial computing. Discover how this system projects an infrared dot pattern and analyzes its reflections to measure depth, helping to integrate virtual and physical environments.

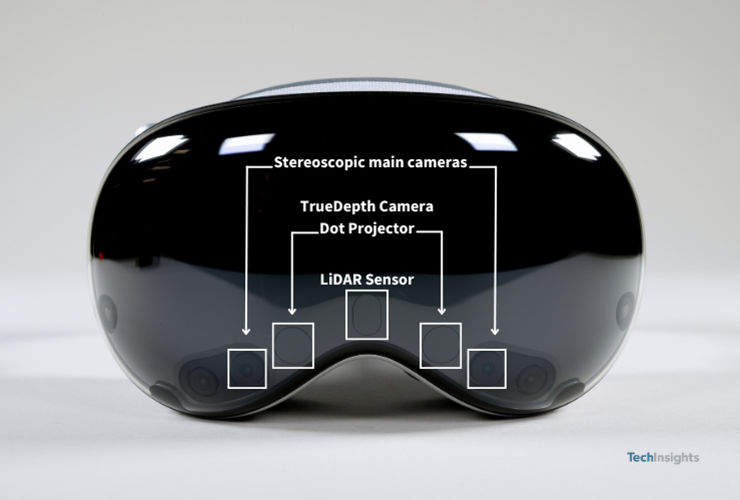

Spatial computing integrates a user's virtual environment with their physical surroundings, and Apple's Vision Pro achieves this with a suite of advanced sensors. While LiDAR (Light Detection and Ranging) plays a significant role in mapping the user's space, the TrueDepth camera system adds another layer of depth sensing. TrueDepth has been a cornerstone of Apple's technology since its introduction on the iPhone X in 2017. It uses a structured-light method: it projects a pattern of dots and determines depth by analyzing their reflections.

The TrueDepth camera system in the Vision Pro includes a Vertical-Cavity Surface-Emitting Laser (VCSEL) that projects a pattern of infrared dots onto the user's environment. An infrared camera captures the reflected pattern. Because the projector and the camera sit a small distance apart, each dot appears shifted in the image by an amount that depends on how far away the surface is, so variations in depth show up as distortions of the pattern. The system analyzes these distortions to map spatial features precisely. Although the Vision Pro's implementation has evolved since the iPhone X, the underlying principle is the same.
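To make the dot-shift idea concrete, here is a minimal sketch of the textbook triangulation relation behind structured-light depth sensing, depth = focal length × baseline / disparity. It is an illustration only, not Apple's implementation: the function name `depth_from_disparity` and the focal length and baseline values are hypothetical, chosen for round numbers rather than taken from any TrueDepth specification.

```python
def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Estimate distance (metres) to the surface a projected dot landed on.

    disparity_px    -- how far the dot appears shifted in the camera image (pixels)
    focal_length_px -- camera focal length expressed in pixels (assumed value)
    baseline_m      -- projector-to-camera separation in metres (assumed value)
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive to triangulate a depth")
    # Similar triangles: a nearer surface produces a larger shift.
    return focal_length_px * baseline_m / disparity_px


# Hypothetical sensor parameters, for illustration only.
FOCAL_LENGTH_PX = 600.0
BASELINE_M = 0.025

for shift in (10.0, 20.0, 40.0):
    depth = depth_from_disparity(shift, FOCAL_LENGTH_PX, BASELINE_M)
    print(f"dot shift {shift:5.1f} px -> depth {depth:.3f} m")
```

Under these assumptions, doubling a dot's shift halves the estimated distance. This inverse relationship is why structured-light systems tend to resolve nearby surfaces more finely than distant ones.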

Interestingly, while Face ID on previous devices required users to enroll their face and store reference data for authentication, the TrueDepth system in the Vision Pro focuses on spatial computing rather than on unlocking the device. This shift highlights how Apple's technology has evolved from user authentication to creating immersive and interactive experiences. By helping to blend the virtual and physical worlds, the system serves as a key building block for the next generation of spatial computing.