BrainChip Adds Temporal Networks

Author: Bryon Moyer

Akida 2, BrainChip’s latest intellectual property (IP) offering, adds time as a component of convolution, allowing activity identification in video streams. It also accelerates the Transformer encoder block in hardware, speeding models employing that block.
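BrainChip does not describe its temporal-network formulation at this level. As a generic illustration only, the sketch below shows what it means to add time to convolution. The kernel slides across consecutive frames rather than only across pixels within one frame, so the output responds to change over time (motion) rather than to a single static image. The function name and the per-frame feature-vector input format are hypothetical choices for illustration. This is not Akida's implementation.

```python
from typing import List


def temporal_conv1d(frames: List[List[float]], kernel: List[List[float]]) -> List[float]:
    """Causal 1D convolution across time (hypothetical illustration).

    frames: T frames, each a feature vector of length C (e.g., pooled spatial features).
    kernel: K taps, each a weight vector of length C; tap k weights the frame k steps back.
    Returns one output per frame; missing history before frame 0 is treated as zeros.
    """
    num_taps = len(kernel)
    channels = len(frames[0])
    out = []
    for t in range(len(frames)):
        acc = 0.0
        for k in range(num_taps):
            src = t - k  # look back k frames
            if src < 0:
                continue  # implicit zero padding before the first frame
            for c in range(channels):
                acc += kernel[k][c] * frames[src][c]
        out.append(acc)
    return out


# A two-tap "difference" kernel: current frame minus previous frame.
frames = [[0, 0], [1, 0], [1, 1], [0, 1]]
kernel = [[1, 1], [-1, -1]]
print(temporal_conv1d(frames, kernel))  # [0.0, 1.0, 1.0, -1.0]
```

A purely spatial convolution applied to each frame separately could not produce this output, because each result depends on two frames at once. That cross-frame dependency is what lets a temporal network recognize an activity rather than a pose.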

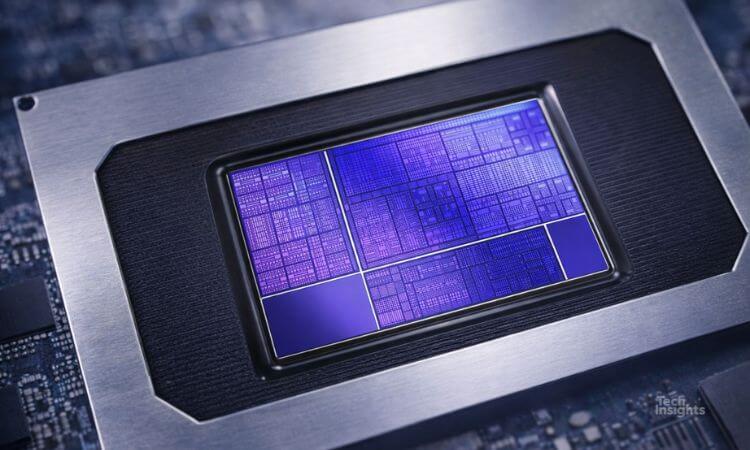

BrainChip’s artificial intelligence (AI) processors use an event-based architecture that responds only to nonzero activations in internal layers, reducing the amount of computation required. It’s a form of neuromorphic computing that the company first implemented in its Akida 1 IP and coprocessor chip. BrainChip now sells that original chip in low quantities as a reference chip for customers evaluating the IP for licensing; it plans no chip for Akida 2.
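The savings from responding only to nonzero activations can be shown with a simple count. The sketch below assumes a fully connected layer with ReLU-style sparse inputs. It does not reflect BrainChip's actual hardware, and both function names are hypothetical. The two versions produce identical outputs, but the event-driven version's multiply-accumulate (MAC) count scales with the number of nonzero inputs rather than the full layer width.

```python
from typing import List, Tuple


def dense_layer(acts: List[float], weights: List[List[float]]) -> Tuple[List[float], int]:
    """Conventional fully connected layer: every input is multiplied, zero or not."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    macs = 0
    for i, a in enumerate(acts):
        for j in range(n_out):
            out[j] += a * weights[i][j]
            macs += 1
    return out, macs


def event_layer(acts: List[float], weights: List[List[float]]) -> Tuple[List[float], int]:
    """Event-driven variant (hypothetical): only nonzero activations trigger work."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    macs = 0
    for i, a in enumerate(acts):
        if a == 0:
            continue  # no event, so no multiply-accumulates for this input
        for j in range(n_out):
            out[j] += a * weights[i][j]
            macs += 1
    return out, macs


acts = [0, 2, 0, 0, 1, 0, 0, 0]                        # 8 inputs, only 2 nonzero
weights = [[i + j for j in range(3)] for i in range(8)]  # 8x3 weight matrix
print(dense_layer(acts, weights))  # ([6.0, 9.0, 12.0], 24)
print(event_layer(acts, weights))  # ([6.0, 9.0, 12.0], 6)
```

The real benefit depends on activation sparsity. A layer with few zero activations gains little, which is why event-based designs pair well with networks whose activations are mostly zero.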

The second generation brings four changes to the original architecture; in addition to temporal networks and Transformer encoders, it adds the INT8 data type and the ability to handle long-range forward skip connections. Akida 1 quantized aggressively to INT4 and below, but INT8 has become the most common edge inference data type; Akida 2 acknowledges that.
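The trade-off between INT4 and INT8 comes down to resolution. INT4 offers 16 levels and INT8 offers 256. The sketch below uses generic symmetric per-tensor quantization, which is a common textbook scheme and not necessarily the one Akida uses. It shows that for the same value range, the INT4 step size is roughly 18 times coarser (127/7), so reconstruction error grows accordingly. The helper names are hypothetical.

```python
from typing import List, Tuple


def quantize_symmetric(x: List[float], bits: int) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to signed integers of the given width."""
    qmax = 2 ** (bits - 1) - 1          # 127 for INT8, 7 for INT4
    max_abs = max(abs(v) for v in x)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest level and clamp to the symmetric range [-qmax, qmax].
    q = [max(-qmax, min(qmax, round(v / scale))) for v in x]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integer levels back to real values."""
    return [v * scale for v in q]


def max_error(x: List[float], bits: int) -> float:
    """Largest absolute reconstruction error after a quantize/dequantize round trip."""
    q, scale = quantize_symmetric(x, bits)
    return max(abs(a - b) for a, b in zip(x, dequantize(q, scale)))


x = [0.9, -0.45, 0.12, 0.03, -1.0]
print(max_error(x, 4), max_error(x, 8))  # INT4 error is far larger than INT8 error
```

Because rounding error is at most half a step, the INT8 error here is bounded by about 0.004, while INT4 can miss by up to about 0.07 on the same data. That gap explains why INT8 has become the default for edge inference when accuracy matters.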

Since the Akida 1 launch, the company has signed MegaChips and Renesas as IP customers. The company says many other prospects have evaluated the reference silicon (including Circle8 Clean Technologies, for an application that improves recycling sorting), but those companies have yet to make licensing decisions. Given its cash and revenue position, BrainChip must boost sales to offset its cash burn.

The company faces no event-based IP competitors, and it claims lower-power inference than its competitors’ conventional-network IP. But that uniqueness makes tool and ecosystem development critical: customers must be able to implement networks without needing to understand the unusual underlying technology.