Posted: October 17, 2016

Contributing Authors: Dick James

One of the lesser-known stories of mobile phone evolution is the development of proximity sensing, which saves power and disables touch screen functions when the phone is actually being used as a phone. In practice this means turning off the screen (and with it the touch function) when the phone is brought up to the ear.

The Early Days

In the very early days there was no dedicated proximity sensor; a simple photodiode was used to sense the change in light level. This worked in most circumstances, but under certain conditions (e.g. an old guy with a white beard) it didn't, to the embarrassment of the manufacturers. With the screen still on, of course, the touch function still works, and an inadvertent touch could hang up the call. Apparently the iPhone 4 got a bad name for this particular fault.

Active Systems

As a result, active systems were introduced, usually an LED combined with light sensors: the sensors detect the change in light as the phone comes to the face, and active illumination from the LED then confirms facial proximity. If the proximity sensor receives reflected light above a pre-defined threshold level, it turns the screen off. This threshold has to be set so that the sensor works in close to 100% of situations, irrespective of the reflectance of the adjacent surface.
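To illustrate why a single fixed threshold is hard to choose, here is a minimal Python sketch of a reflectance-based sensor. It assumes (our assumption, not something measured in the teardown) that reflected LED light falls off with the square of distance; the reflectances, threshold and helper names are all hypothetical. It computes how close each surface must be before the screen turns off.

```python
import math

# Hypothetical model of an LED + photodiode proximity sensor.
# Assumptions (ours, not from the teardown): reflected signal falls off as
# 1/distance^2, and all powers, reflectances and thresholds are made-up units.


def received_signal(reflectance: float, distance_m: float, led_power: float = 1.0) -> float:
    """Reflected LED light reaching the sensor under an inverse-square model."""
    return led_power * reflectance / distance_m ** 2


def screen_should_turn_off(signal: float, threshold: float) -> bool:
    """Fixed-threshold decision: screen off when reflected light exceeds the threshold."""
    return signal > threshold


def trigger_distance_m(reflectance: float, threshold: float, led_power: float = 1.0) -> float:
    """Distance at which the signal equals the threshold (solve P*R/d^2 = T for d)."""
    return math.sqrt(led_power * reflectance / threshold)


THRESHOLD = 100.0  # hypothetical fixed threshold

# Hypothetical reflectances for surfaces that might be held near the phone.
for surface, reflectance in [("white beard", 0.8), ("skin", 0.35), ("dark hair", 0.05)]:
    d_cm = trigger_distance_m(reflectance, THRESHOLD) * 100
    print(f"{surface:<12} screen turns off within {d_cm:.1f} cm")
```

With these made-up numbers the trigger distance varies about fourfold (roughly 8.9 cm for the brightest surface down to 2.2 cm for the darkest) even though the threshold never changes, which is the reflectance dependence the threshold has to cope with.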

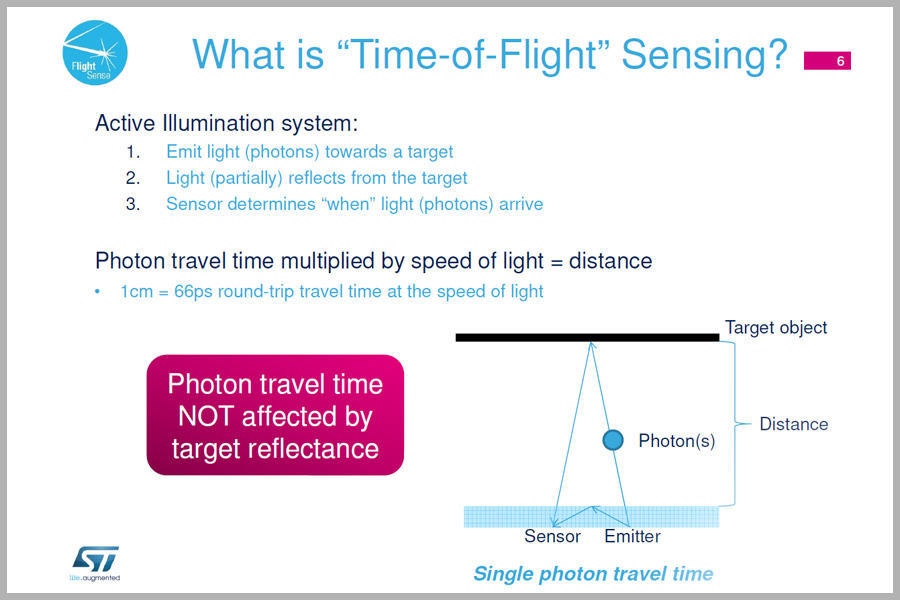


Time-of-Flight Sensor

Now we are seeing a further step in this sequence with the transition from the iPhone 6s to the iPhone 7. The 6s used the LED + sensors option, but the iPhone 7 appears to have gone to the next stage and introduced a time-of-flight (ToF) sensor. The advantage of ToF is that it doesn't depend on the reflected light level; instead, it measures the travel time of photons emitted from a laser diode, and this travel time is independent of the reflectance of the target surface.
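The distance follows from the round-trip time as d = c·t/2, since the light travels out and back. As a rough sketch of the timescales involved (our own arithmetic, not figures from ST or Apple), the snippet below converts a few phone-to-face distances into round-trip times; note that surface reflectance appears nowhere in the calculation.

```python
# Round-trip time of a light pulse for a given target distance, and the inverse.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def round_trip_time_s(distance_m: float) -> float:
    """Time for light to travel to the target and back (path length 2 * d)."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


def distance_from_round_trip_m(t_s: float) -> float:
    """Recover the distance by halving the round-trip path: d = c * t / 2."""
    return SPEED_OF_LIGHT_M_PER_S * t_s / 2.0


for d in (0.01, 0.05, 0.5):
    t = round_trip_time_s(d)
    print(f"{d * 100:5.1f} cm -> {t * 1e12:7.1f} ps round trip")
```

At 5 cm the round trip takes only about 334 ps, and each centimetre of distance adds about 67 ps, which gives a sense of the timing precision the detector needs.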

The First ToF

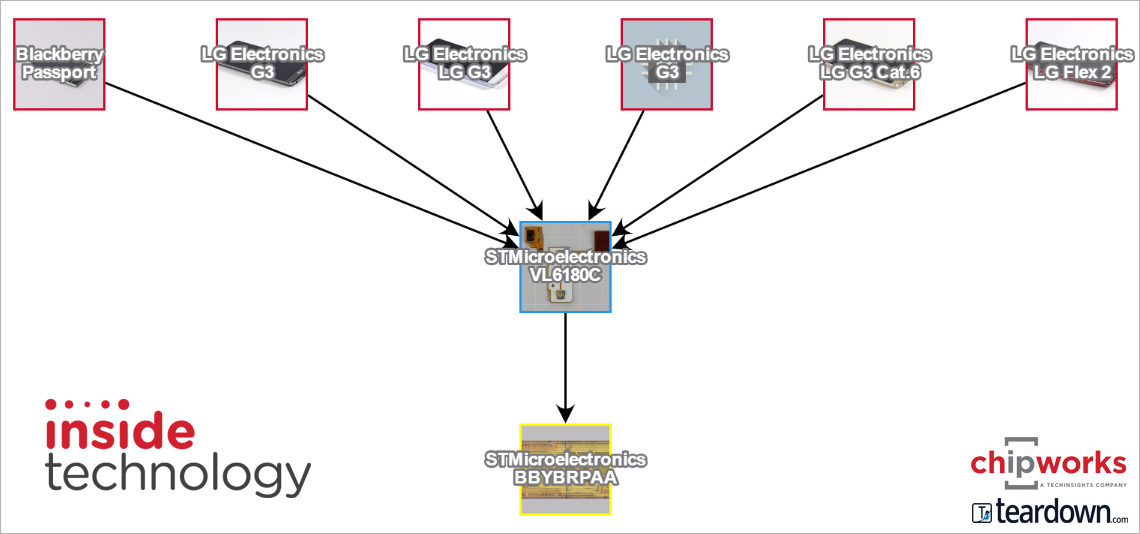

We first saw a ToF sensor in the BlackBerry Passport, which introduced us to the concept and to the STMicroelectronics VL6180, a three-in-one smart optical module incorporating a proximity sensor, an ambient light sensor, and a vertical-cavity surface-emitting laser (VCSEL) light source. We subsequently found it in a few LG phones:


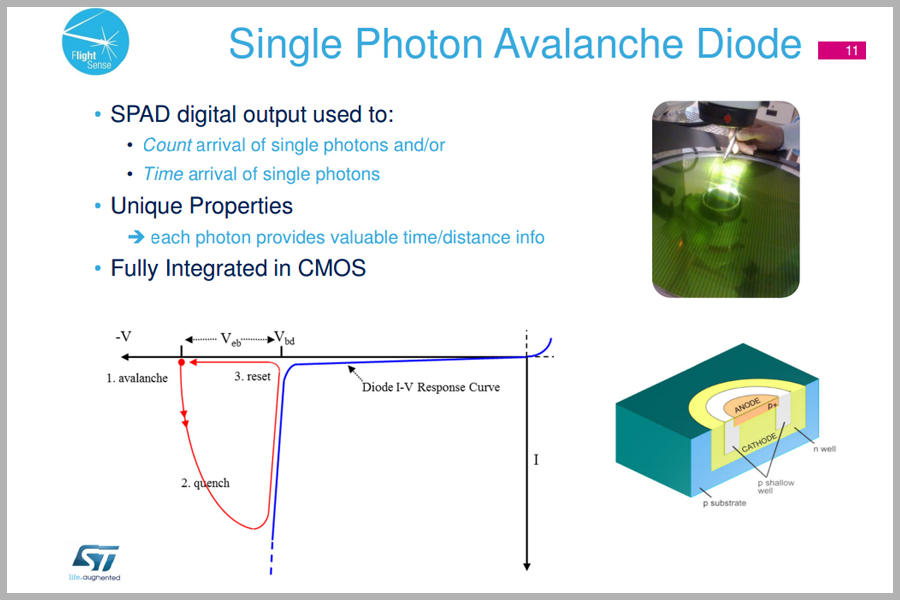

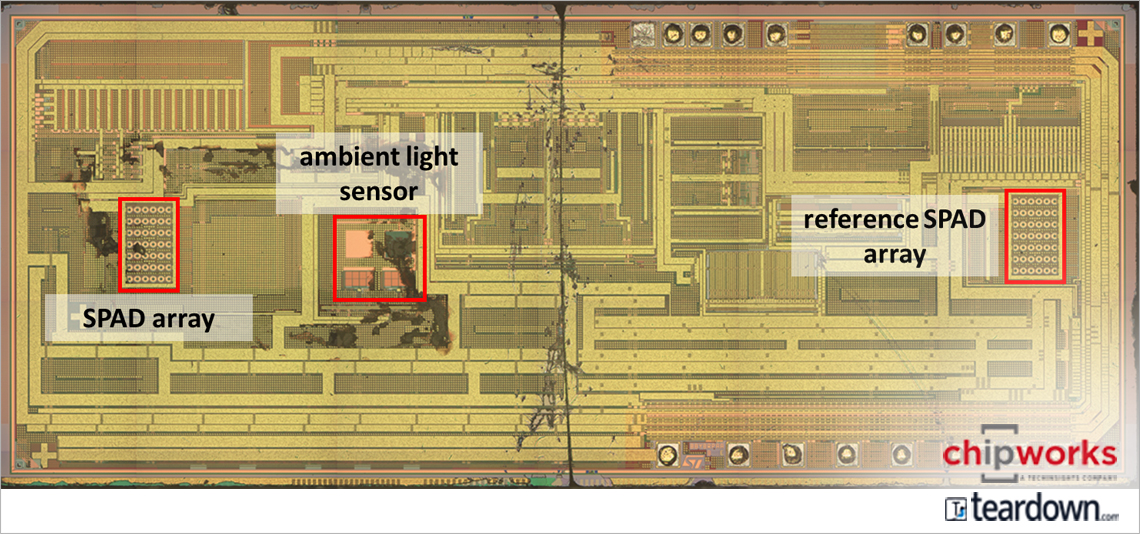

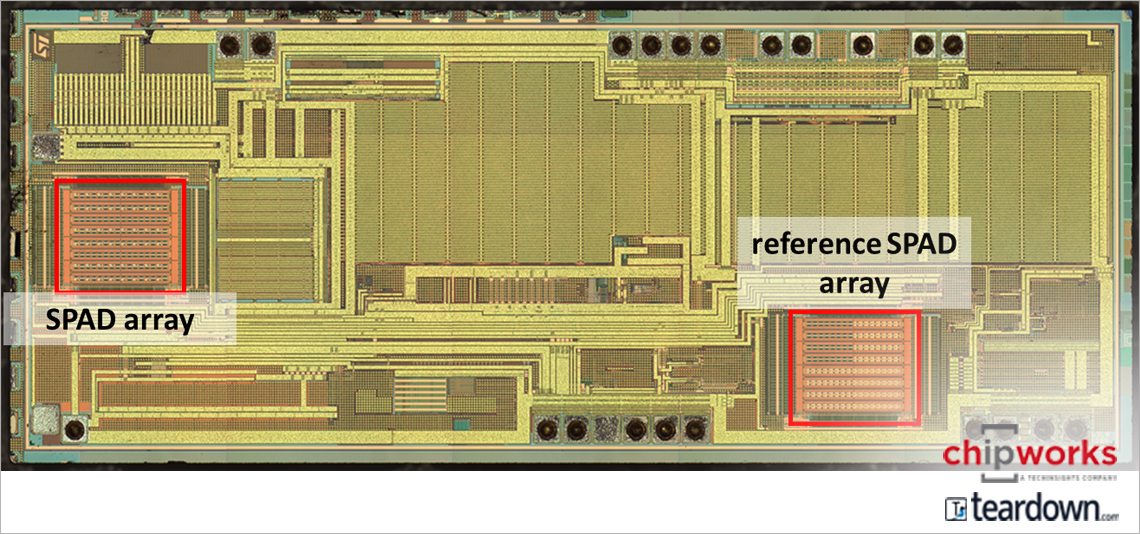

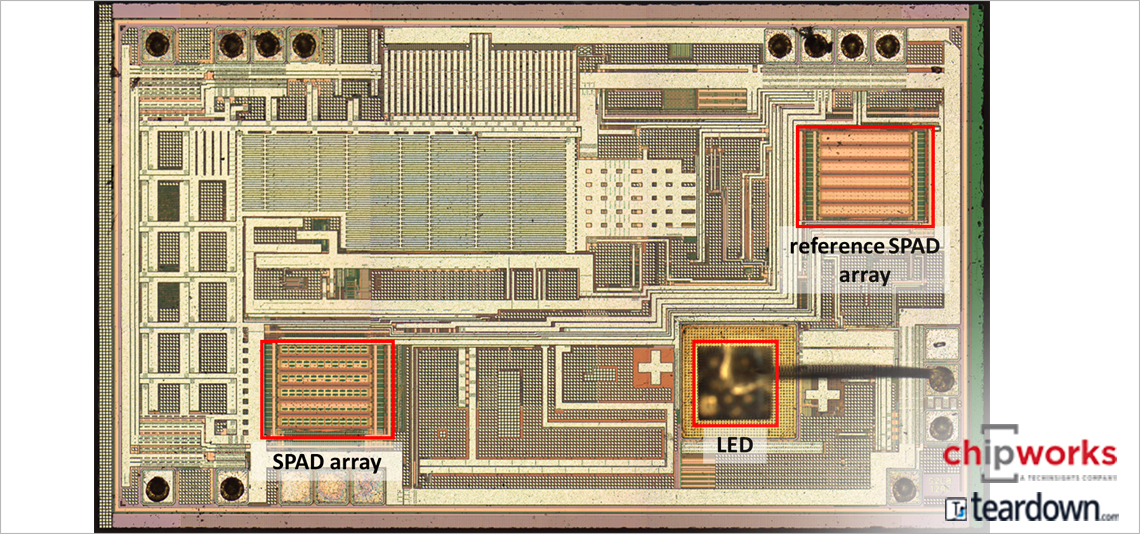

The proximity sensor is actually an array of single-photon avalanche diodes (SPADs), which, fortunately, can be integrated into a regular CMOS process.

The VL6180 actually has two SPAD arrays on-die, together with the ambient light sensor. The VCSEL is co-packaged with the die to give the complete unit.
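How the SPAD arrays turn individual photon detections into a distance is not something the die photos reveal. A common generic approach in SPAD ranging is to histogram photon arrival times over many laser pulses and take the peak; the Python sketch below does that. We also assume, purely for illustration, that one of the two arrays sees the laser directly as a reference and the other sees the return from the target, so subtracting their peaks cancels delays common to both. That split, the 50 ps bin width, the photon counts and the helper names (`simulate_arrivals`, `peak_time_s`) are all hypothetical, not ST's documented design.

```python
import random
from collections import Counter

# Generic SPAD ranging sketch. Assumption: distance comes from the peak of a
# photon-arrival-time histogram, with one array used as an on-chip reference.
# This is NOT ST's documented algorithm; all numbers are hypothetical.

C_M_PER_S = 299_792_458.0
BIN_S = 50e-12  # hypothetical histogram bin width (50 ps)


def simulate_arrivals(rng, n_signal, t_signal_s, jitter_s, n_ambient, window_s):
    """Hypothetical photon timestamps: a laser peak plus uniform ambient light."""
    times = [rng.gauss(t_signal_s, jitter_s) for _ in range(n_signal)]
    times += [rng.uniform(0.0, window_s) for _ in range(n_ambient)]
    return times


def peak_time_s(timestamps, bin_s=BIN_S):
    """Histogram the timestamps and return the centre of the fullest bin."""
    hist = Counter(int(t // bin_s) for t in timestamps if t >= 0.0)
    peak_bin, _count = max(hist.items(), key=lambda kv: kv[1])
    return (peak_bin + 0.5) * bin_s


rng = random.Random(1)    # fixed seed so the run is repeatable
fixed_delay_s = 1.21e-9   # hypothetical driver/electronics delay common to both arrays
target_m = 0.05           # true distance to the face, 5 cm
window_s = 10e-9          # hypothetical 10 ns measurement window

# Reference array: sees the laser pulse directly, after the fixed delay only.
reference = simulate_arrivals(rng, 2000, fixed_delay_s, 30e-12, 500, window_s)
# Return array: sees the same pulse after the extra round trip to the target.
round_trip_s = 2.0 * target_m / C_M_PER_S
returned = simulate_arrivals(rng, 300, fixed_delay_s + round_trip_s, 30e-12, 500, window_s)

# Subtracting the two peaks cancels the common fixed delay.
estimate_m = C_M_PER_S * (peak_time_s(returned) - peak_time_s(reference)) / 2.0
print(f"estimated distance: {estimate_m * 100:.1f} cm")
```

Because each peak is located only to the nearest 50 ps bin, this toy estimate is quantized to steps of about 7.5 mm (c × 50 ps / 2); a finer result would need narrower bins or interpolation around the peak.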

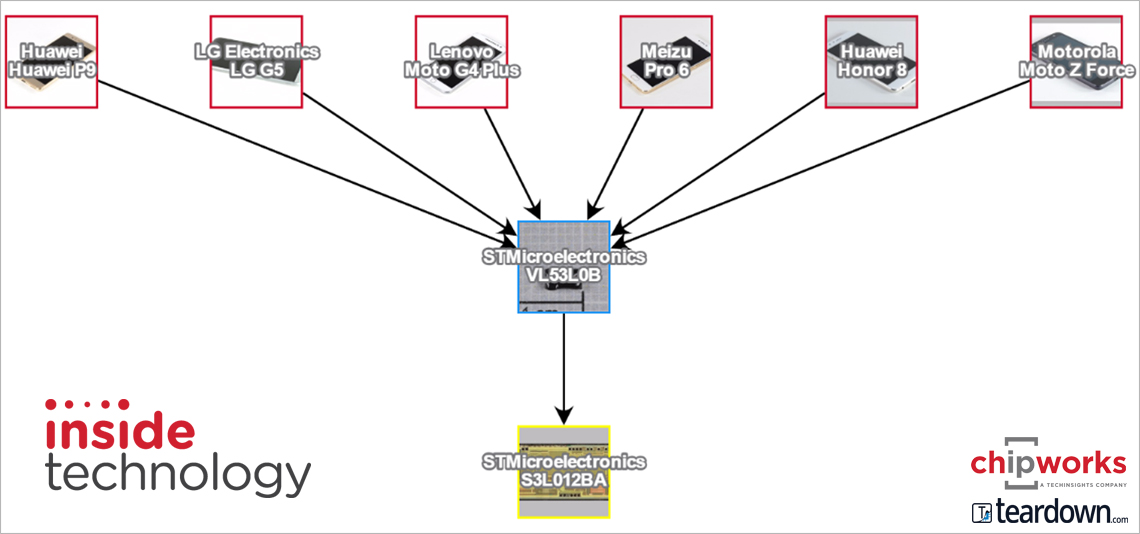

In January, STMicroelectronics announced its second-generation sensor, the VL53L0, which we found in half a dozen phones this year, all from the Asia-Pacific region (don’t forget that Motorola is now Lenovo).

Both of these were used as range-finding devices for the primary camera, not as proximity sensors for phone operation. The VL53L0 has dispensed with the ambient light sensor, and the SPAD arrays have been modified.

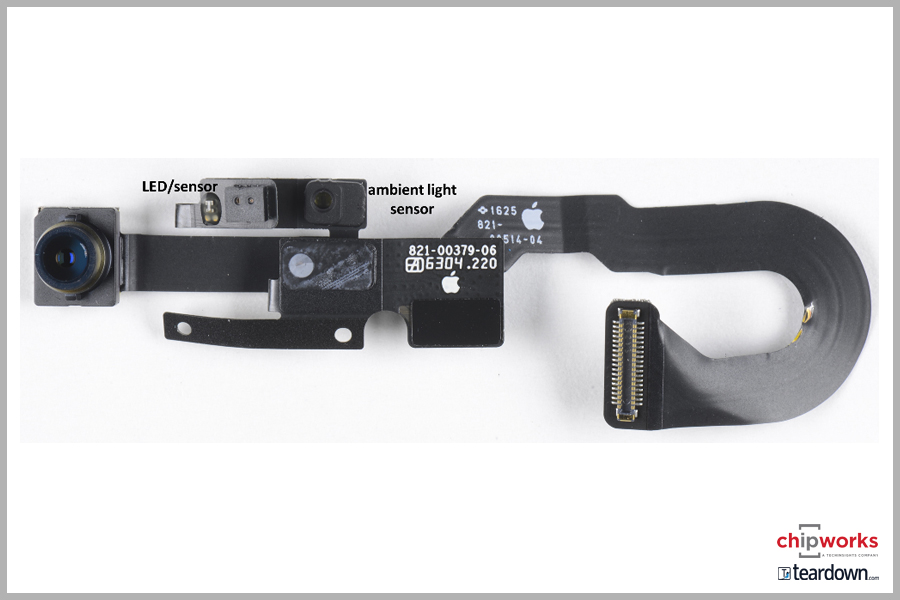

Now we get to the iPhone 7 – when we looked at the selfie camera side, and took out the sub-assembly, both the ambient light sensor and the LED/sensor module were different from those in the 6s model.

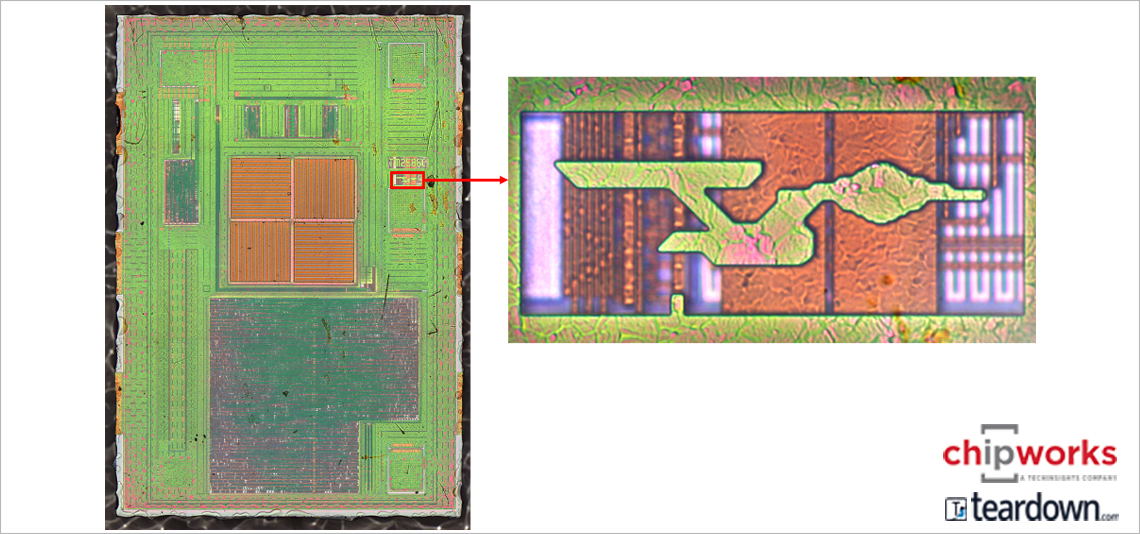

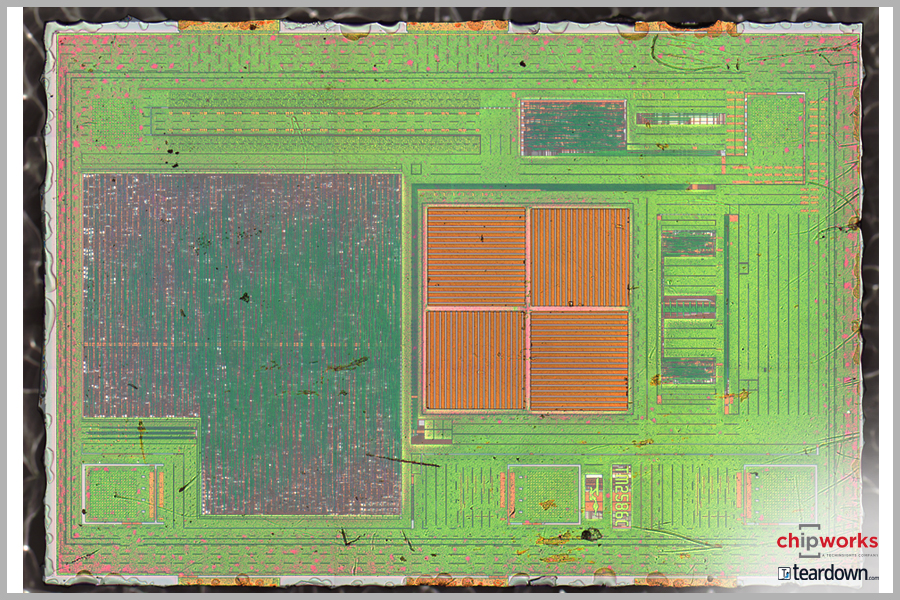

When we take them off and look at the module, it looks very ST-ish. The die is not the same as the VL53L0 die (S3L012BA) with its two SPAD arrays, but it is clearly similar in style and in die numbering (S2L012AC). This time, however, the VCSEL is bonded on top of the ToF die, giving a very compact module.

Based on this, we think it is safe to conclude that the proximity sensor is now a ToF sensor that can also act as an accurate rangefinder for the selfie camera. The same sensor is also in the iPhone 7 Plus, which makes it a good design win for STMicroelectronics. So far neither Apple nor STMicroelectronics has announced anything, but this is yet another of the subtle improvements that we see in the evolution of mobile phones.

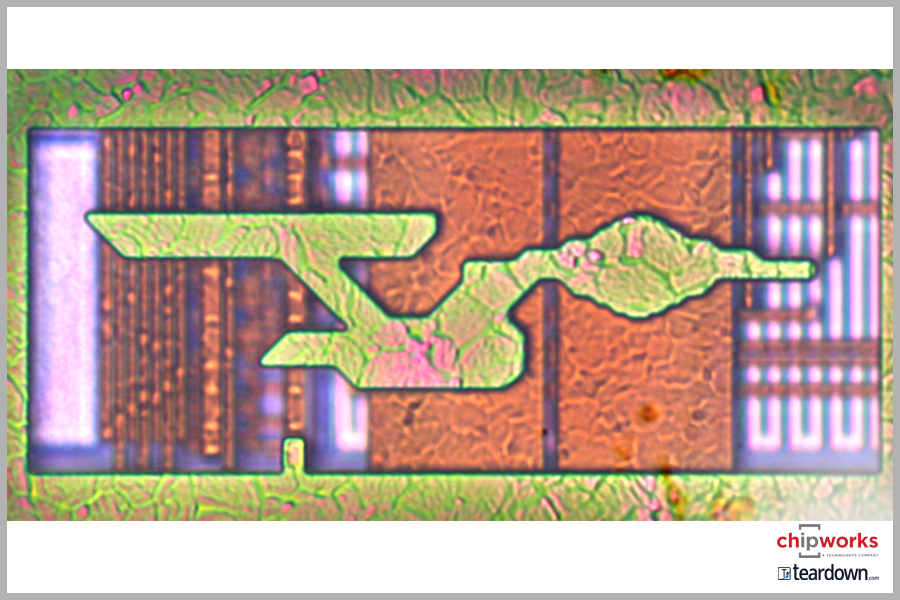

During our teardown, we also looked at the ambient light sensor, which is actually the same one that was in the iPhone 6s, and looks fairly conventional. We don’t know who makes it, but it does have Star Trek’s Starship Enterprise on board!