TechInsights’ Analysis of AMD’s MI300X Reveals Samsung HBM3

Explore the advancements in AI processors, advanced packaging, and memory technology.


High Performance Computing from TechInsights is designed to help your business drive innovation and manage costs and risks.


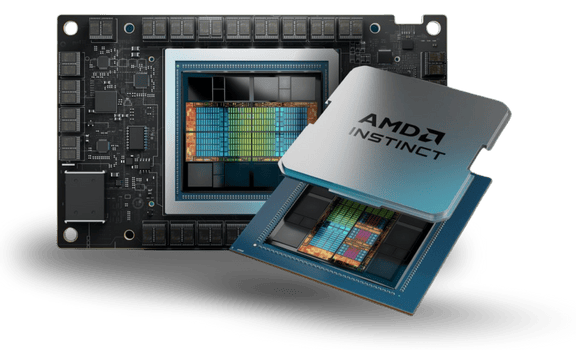

TechInsights has identified the first commercial instance of Samsung’s HBM3 memory, integrated into AMD’s MI300X AI accelerator. Samsung announced HBM3’s availability in August 2023, and its deployment in a commercially scaled product marks a significant milestone for both the memory maker and AI chipmakers. As demand for AI chips grows, Samsung’s HBM3 technology brings higher bandwidth, lower latency, and improved power efficiency, all of which matter for high-performance AI accelerators.

This finding also highlights the growing importance of advanced memory for AI applications. As AI workloads grow more complex, faster and more efficient memory becomes critical. Samsung’s HBM3 addresses this need by supplying the bandwidth that next-generation AI processors require to handle the intensive computations of machine learning, deep learning, and other data-driven applications.

TechInsights is accelerating its research into high-performance computing, with a focus on AI accelerators and data center servers. Our analysis examines the interplay between memory, logic, and advanced packaging, and shows how these technologies drive performance in AI chips. For more details on the AMD MI300X and Samsung HBM3, visit the TechInsights Platform for the full report.