Huawei

Ren Zhengfei (任正非) founded Huawei in 1987 and continues to lead as CEO. Three other executives—Guo Ping (郭平), Eric Xu (徐直军), and Ken Hu (胡厚崑)—run its three main business units and manage day-to-day operations under Ren. Huawei, one of the world’s leading communications equipment suppliers, has suffered under US sanctions that cut off its access to advanced foundry services. As a result, the company’s revenue declined sharply in 2021. Even so, it remains profitable and holds more than $20 billion in cash.

Despite briefly achieving the worldwide lead in smartphone shipments, Huawei saw its market share plummet below 4% following the sale of its low-end Honor brand and has been unable to ship many of its high-end models owing to a lack of chips. After this consumer-revenue decline, the company generates about 15% of its revenue from its enterprise business. This unit sells standard servers and networking equipment.

Through its HiSilicon subsidiary, the company designed its deep-learning architecture, called DaVinci, which appears in its Ascend products. Huawei offers the Ascend 310 for edge systems and the Ascend 910 for data centers. The DaVinci architecture supports both FP16 and INT8 data, so it can handle either training or inference. The sanctions, however, prevent the company from acquiring these chips from TSMC.

Key Features and Performance

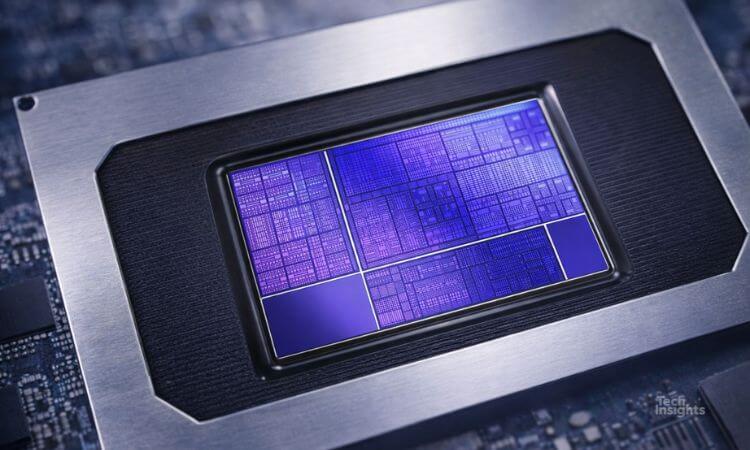

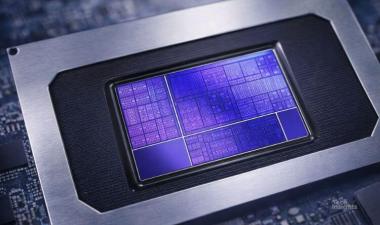

The Ascend 910 contains 32 DaVinci cores connected to a network-on-a-chip (NoC) mesh. Each core contains 4,096 units that can each perform one FP16 MAC or two INT8 MACs per cycle at 1.0GHz. Thus, the chip has a peak performance of 256Tflop/s (FP16) or 512 TOPS (INT8). The design has a total of 84MB of on-chip SRAM, including a 32MB buffer that all the cores share. In addition, it connects to four HBM2 channels that deliver a total of 1,228GB/s of off-die memory bandwidth to 32GB of memory, enabling it to operate efficiently on large models that don’t fit in the on-die memory.
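The peak figures above follow from the core count, unit count, and clock speed. The short Python sketch below reproduces that arithmetic, assuming the usual convention that one MAC counts as two operations; the function and variable names are ours for illustration. With decimal prefixes the result is about 262Tflop/s, so the quoted 256Tflop/s appears to reflect dividing by 1,024 rather than 1,000, which is our reading and not something Huawei states. The last line estimates how many FP16 operations the chip must perform per byte of HBM2 traffic to stay compute bound, using the quoted peak and bandwidth.

```python
def peak_tera_ops(cores, units_per_core, macs_per_unit, clock_ghz):
    """Peak throughput in tera-operations per second (decimal prefixes).

    Assumes one multiply-accumulate (MAC) counts as two operations.
    """
    macs_per_cycle = cores * units_per_core * macs_per_unit
    giga_ops = macs_per_cycle * 2 * clock_ghz  # ops per nanosecond
    return giga_ops / 1000.0

# Ascend 910 parameters as given in the text.
fp16 = peak_tera_ops(cores=32, units_per_core=4096, macs_per_unit=1, clock_ghz=1.0)
int8 = peak_tera_ops(cores=32, units_per_core=4096, macs_per_unit=2, clock_ghz=1.0)

# Quoted peak (256 Tflop/s) divided by quoted HBM2 bandwidth (1,228 GB/s):
# the operations per byte needed before compute, not memory, is the limit.
ridge = 256_000 / 1228

print(f"FP16 peak: {fp16:.1f} Tflop/s")
print(f"INT8 peak: {int8:.1f} TOPS")
print(f"Ops per HBM byte to stay compute bound: {ridge:.0f}")
```

By this estimate, a workload needs roughly 200 FP16 operations per byte fetched from HBM2 to reach the rated peak, which helps explain the large on-chip SRAM.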

Unlike most accelerators, the processor includes 16 Arm-compatible CPU cores, allowing it to run without an external host processor. The company reused the Taishan Arm v8.2 CPU from its Kunpeng 920 server processor. All 16 cores share a 16MB L3 cache, which connects with a pair of DDR4 SDRAM channels for external memory. The CPU subsystem enables the chip to run a full operating system. The 910 also has four video decoders.

In TSMC’s 7nm EUV (N7+) technology, the compute die measures 456mm² including on-die memories plus the Arm CPU complex. The Ascend 910 separates I/O functions into a 168mm² die called Nimbus, which is likely built in an older 12nm or 16nm process to reduce cost. Nimbus provides 24 lanes of PCIe Gen4 and two different cache-coherent interfaces: CCIX and a proprietary port called HCCS. Three HCCS ports, operating at 30GB/s each, enable a fully connected four-chip cluster. For larger clusters, the chip also has dual 100Gbps Ethernet ports that handle RDMA (RoCEv2), providing a low-latency interconnect for multiple chassis. Huawei rates the Ascend 910 chip at 310W but the complete accelerator card at 350W.
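Three HCCS ports suffice for four chips because a fully connected cluster of n chips needs n-1 ports on each chip. The sketch below enumerates the links under that assumption; `full_mesh` is a hypothetical helper of ours, and the text does not say whether 30GB/s is per direction, so the aggregate is simply ports multiplied by the quoted rate.

```python
from itertools import combinations

def full_mesh(chips, ports_per_chip, gbytes_per_port):
    """Return the point-to-point links and per-chip aggregate bandwidth
    for a fully connected cluster, or raise if the chips lack ports."""
    ports_needed = chips - 1  # one direct link to every other chip
    if ports_needed > ports_per_chip:
        raise ValueError(
            f"{chips} chips need {ports_needed} ports each, "
            f"but only {ports_per_chip} are available"
        )
    links = list(combinations(range(chips), 2))
    per_chip_bw = ports_needed * gbytes_per_port
    return links, per_chip_bw

# Ascend 910: three HCCS ports at 30 GB/s each, four-chip cluster.
links, per_chip_bw = full_mesh(chips=4, ports_per_chip=3, gbytes_per_port=30)
print(f"{len(links)} links, {per_chip_bw} GB/s aggregate per chip")
```

A fifth chip would require a fourth port, so `full_mesh(5, 3, 30)` raises an error; this is consistent with the chip relying on Ethernet for larger clusters.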

The company has announced several products using the Ascend 910: the Atlas 300 AI training card, Atlas 800 AI server, and Atlas 900 AI cluster. The Atlas 800 server integrates eight Ascend 910 modules, four Kunpeng 920 processors, and 8x100GbE ports. The Atlas 300 PCIe accelerator card and Atlas 800 server began shipping in 1Q20. The Atlas 900 cluster, which we assume is primarily intended for the company’s public cloud services (Huawei Cloud), has up to 4,096 DaVinci nodes.

The Atlas 200 module, which employs the Ascend 310 chip, targets edge systems that use less than 10W. To reduce power, this chip has only two DaVinci cores running at 500MHz, generating a peak performance of 16 TOPS (INT8). It also features eight Cortex-A55 CPU cores, a single Cortex-M3 CPU for real-time functions, and a video engine capable of decoding sixteen 1080p video channels at 30fps in H.264 or H.265 format. The module sports a full set of I/Os including four PCIe Gen3 lanes, USB, Gigabit Ethernet, and serial ports. Packing 4GB of LPDDR4X DRAM, the module has a typical power of 5.5W; the 8GB version requires 8W. These modules entered production in 3Q19.
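As a rough check, the 16 TOPS rating is consistent with two cores at 500MHz if each Ascend 310 core has the same 4,096 units as the Ascend 910 core, performing two INT8 MACs per cycle. The text does not state the 310's unit count, so that is an assumption here. The sketch also divides the rated peak by the module's power figures to estimate efficiency; because module power includes the DRAM and I/O, these are module-level numbers, not chip-level ones.

```python
# Ascend 310 parameters from the text; UNITS_PER_CORE is assumed to match
# the Ascend 910's DaVinci core and is not stated for the 310.
CORES = 2
UNITS_PER_CORE = 4096
INT8_MACS_PER_UNIT = 2
OPS_PER_MAC = 2          # one multiply plus one accumulate
CLOCK_GHZ = 0.5

peak_tops = CORES * UNITS_PER_CORE * INT8_MACS_PER_UNIT * OPS_PER_MAC * CLOCK_GHZ / 1000

# Module-level efficiency using the rated 16 TOPS and the quoted power.
RATED_TOPS = 16
tops_per_watt_4gb = RATED_TOPS / 5.5   # 4GB module, typical power
tops_per_watt_8gb = RATED_TOPS / 8.0   # 8GB module

print(f"Computed peak: {peak_tops:.1f} TOPS")
print(f"4GB module: {tops_per_watt_4gb:.1f} TOPS/W")
print(f"8GB module: {tops_per_watt_8gb:.1f} TOPS/W")
```

The computed peak of about 16.4 TOPS rounds to the rated 16 TOPS, which lends some support to the assumed unit count.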

To program its Atlas devices, Huawei developed a neural network framework called MindSpore, which it recently open-sourced. It also provides drivers for standard frameworks such as PyTorch and TensorFlow. It hopes to build a new ecosystem around MindSpore, but the standard frameworks allow customers to easily port existing neural networks. The company has expanded the software stack with algorithm libraries, pre-trained models, and other development aids.

Huawei has disclosed little benchmark data for the Ascend 910. It reports a speed of 1,787 images per second (IPS) for a single chip when training ResNet-50, which is 9% better than Nvidia’s V100. It also posted MLPerf Training results for a 512-chip cluster (again, only for ResNet-50) that was 32% faster than a similar cluster of V100 GPUs. On BERT-base, a single Ascend 910 achieves 210 sentences per second, about 25% ahead of the V100. All of the company’s tests employ its MindSpore software.
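The percentage advantages imply V100 baselines that the text does not quote directly. The sketch below backs them out, assuming "X% better" means the Ascend 910 result equals the V100 result multiplied by (1 + X/100). The outputs are derived estimates, not published V100 measurements, and `implied_baseline` is a hypothetical helper.

```python
def implied_baseline(result, percent_ahead):
    """Baseline throughput implied by a result that is percent_ahead % faster."""
    return result / (1 + percent_ahead / 100)

# Single-chip figures from the text.
v100_resnet_ips = implied_baseline(1787, 9)    # ResNet-50 training, images/s
v100_bert_sps = implied_baseline(210, 25)      # BERT-base, sentences/s

print(f"Implied V100 ResNet-50: {v100_resnet_ips:.0f} images/s")
print(f"Implied V100 BERT-base: {v100_bert_sps:.0f} sentences/s")
```

Under this reading, the implied V100 rates are roughly 1,640 images per second on ResNet-50 and 168 sentences per second on BERT-base.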

Conclusions

As Huawei’s first AI accelerator for the data center, the Ascend 910 is impressive. It comes within 20% of Nvidia’s A100 in peak performance on both FP16 (training) and INT8 (inference) data while using slightly less power. It’s also similar in on-chip-cache size and HBM bandwidth. More importantly, it achieves about 90% of the A100’s MLPerf score for training ResNet-50 when both are running in a 512-chip cluster. Despite being surpassed by Nvidia, the Ascend 910 gives Huawei customers a viable alternative for AI acceleration.

US sanctions, however, cut off Huawei’s supply of custom Ascend chips, and it can no longer supply systems using the accelerator, although customers can still rent it through the Huawei Cloud service. The sanctions also prevent the company from deploying design improvements, including architecture changes and a move to 5nm. In the meantime, Nvidia and other competitors will forge ahead. Until Huawei can resolve the US sanctions, realizing its AI dream will be impossible.
