AMD (Advanced Micro Devices)

AMD’s latest data center GPU product, the MI300X, is comparable to Nvidia’s H100 and could take market share from Nvidia in the red-hot AI market. AMD is now on its third generation of data center AI chip products. Its ROCm software stack has made significant progress in recent years, although it has yet to match the maturity of Nvidia’s software stack.

AMD GPU products background

Advanced Micro Devices (AMD) is the second-largest vendor of PC and server processors and the only remaining Intel competitor that produces x86 processors. In 2006, the company acquired ATI, gaining a strong set of core-logic and graphics technologies. Lisa Su joined AMD in 2012 after stints at Freescale (now part of NXP) and IBM, and became CEO in 2014. The company supplies PC processors under the Ryzen brand and server processors under the Epyc brand. The ATI graphics product line later evolved into AMD’s AI products.

AMD’s graphics cards for workstation and data center applications, such as HPC and deep learning, are sold under the Instinct brand name. Initially, these products simply repurposed existing GPU architectures, but in 4Q20 the company unveiled its first accelerator based on the new CDNA architecture: the Instinct MI100. AMD introduced its second-generation CDNA product, the MI250, in 4Q21. This design helped it win bids to supply both processors and accelerators for the El Capitan and Frontier supercomputers, slated to be among the world’s fastest.

El Capitan uses AMD’s follow-on GPU, the MI300, announced in January 2023. The MI300 is based on the CDNA3 architecture and was custom designed for the supercomputer. AMD announced a commercial version of the processor, called the MI300X, in 4Q23. The chiplet-based design is comparable to Nvidia’s H100, the current state of the art.

Each vendor profile provides a detailed overview of the company and its products, featuring expert analysis and insights on product strengths, potential competitors, customer names, and major design achievements. Additionally, the profiles explore the various business models and monetization strategies used by different vendors in the industry.

CDNA first- and second-generation GPU products

The first-generation CDNA architecture, used in the MI100, is based on AMD’s Vega GPU design and adds a matrix unit to support AI and HPC workloads. For matrix multiplication, the new unit can perform 1,024 FP16 operations per cycle, four times as many as the Vega core. It also handles INT8 and INT4 operations at the same rate, although FP32 matrix operations are considerably slower. Combining the fast matrix unit with twice the core count enables the MI100 to achieve 185 Tflop/s (FP16) at a 300W TDP. This performance applies only to matrix operations; general FP32 vector operations run at half the FP32 matrix rate.
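The 185 Tflop/s figure follows from multiplying core count, per-core matrix operations per cycle, and clock speed. The Python sketch below reproduces that arithmetic. The 1.502GHz peak clock and the per-core FP32 rates (256 matrix and 128 vector operations per cycle) are our assumptions from public spec sheets, not figures stated in this profile; the core count and the 1,024 FP16 operations per cycle come from the text.

```python
# Sketch: peak throughput = compute units x ops per CU per clock x clock.
# Assumptions (not from this profile): the 1.502 GHz MI100 peak clock and the
# per-CU FP32 rates below. The 1,024 FP16 ops/cycle figure is from the text.

def peak_tflops(compute_units: int, ops_per_cu_per_clock: int, clock_ghz: float) -> float:
    """Peak rate in tera-operations per second (CUs x ops x GHz gives Gop/s)."""
    return compute_units * ops_per_cu_per_clock * clock_ghz / 1000.0

MI100_CUS = 120
MI100_CLOCK_GHZ = 1.502   # assumed peak engine clock

FP16_MATRIX_OPS = 1024    # per CU per cycle (from the text); INT8 runs at the same rate
FP32_MATRIX_OPS = 256     # assumed per CU per cycle
FP32_VECTOR_OPS = 128     # assumed: 64 lanes x 2 ops (fused multiply-add)

fp16_matrix = peak_tflops(MI100_CUS, FP16_MATRIX_OPS, MI100_CLOCK_GHZ)
fp32_matrix = peak_tflops(MI100_CUS, FP32_MATRIX_OPS, MI100_CLOCK_GHZ)
fp32_vector = peak_tflops(MI100_CUS, FP32_VECTOR_OPS, MI100_CLOCK_GHZ)

print(f"FP16 matrix: {fp16_matrix:.1f} Tflop/s")
print(f"FP32 matrix: {fp32_matrix:.1f} Tflop/s")
print(f"FP32 vector: {fp32_vector:.1f} Tflop/s")
```

Under these assumptions the sketch gives roughly 184.6, 46.1, and 23.1 Tflop/s: the first rounds to the quoted 185 Tflop/s (and 185 TOPS INT8), and the last is half the FP32 matrix rate.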

The MI100 has 120 usable cores (compute units), which together deliver 185 TOPS of INT8 performance. The cores share 8MB of level-two cache (L2$) and four channels of High Bandwidth Memory (HBM2). The chip employs a x16 PCIe Gen4 interface to the host processor. It also provides three xGMI ports configurable as Infinity Fabric 2.0 links, enabling coherent GPU-to-GPU connections among up to four chips.

The second-generation CDNA2 architecture extends the matrix unit to handle FP64 data at the same rate as FP32; vector performance is still half of matrix performance for both data types. The MI250 product combines two dies in one package to nearly double the core count. The MI250X has 220 cores (plus 4 redundant cores), whereas the MI250 has only 208 cores to increase yield. A shrink to 6nm delivers a small rise in clock speed to 1.7GHz, enabling the high-end model to more than double the MI100’s peak performance. The transistor shrink holds power to a 560W TDP, resulting in modestly higher performance per watt.
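Multiplying cores, per-core operations, and clock speed shows how the MI250X more than doubles MI100 peak performance while improving performance per watt only modestly. In this sketch, the per-core rates (1,024 FP16 and 256 FP64 matrix operations per cycle) and the MI100’s 1.502GHz clock are our assumptions from public spec sheets; the core counts, the 1.7GHz clock, and both TDPs come from the text.

```python
# Sketch comparing MI250X (CDNA2) with MI100 (CDNA) peak matrix throughput.
# Assumed (not stated in the text): per-CU op rates and the MI100 clock.

def peak_tflops(compute_units: int, ops_per_cu_per_clock: int, clock_ghz: float) -> float:
    """Peak rate in tera-operations per second."""
    return compute_units * ops_per_cu_per_clock * clock_ghz / 1000.0

# MI100: 120 CUs, assumed 1.502 GHz, 300 W TDP
mi100_fp16 = peak_tflops(120, 1024, 1.502)
mi100_watts = 300

# MI250X: 220 CUs, 1.7 GHz, 560 W TDP (from the text)
mi250x_fp16 = peak_tflops(220, 1024, 1.7)
mi250x_fp64_matrix = peak_tflops(220, 256, 1.7)  # FP64 matrix at the FP32 matrix rate
mi250x_fp64_vector = mi250x_fp64_matrix / 2      # vector is half of matrix
mi250x_watts = 560

speedup = mi250x_fp16 / mi100_fp16
perf_per_watt_gain = (mi250x_fp16 / mi250x_watts) / (mi100_fp16 / mi100_watts)

print(f"MI250X FP16 matrix: {mi250x_fp16:.1f} Tflop/s ({speedup:.2f}x MI100)")
print(f"MI250X FP64 matrix/vector: {mi250x_fp64_matrix:.1f} / {mi250x_fp64_vector:.1f} Tflop/s")
print(f"Perf/W gain over MI100: {perf_per_watt_gain:.2f}x")
```

Under these assumptions the MI250X lands at about 383 Tflop/s FP16, a little over twice the MI100, while performance per watt rises by only about 11% because TDP nearly doubles as well.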

To support the greater compute rate, the CDNA2 products double the number of memory (HBM) channels and increase the maximum data rate, reaching 3.3TB/s of bandwidth. Similarly, boosting both the number and speed of the Infinity Fabric connections greatly expands coherence bandwidth. The MI250 supports Infinity Fabric links to the host processor as well, allowing it to coherently share data with certain AMD Epyc processors that also work with the fabric. The GPUs additionally implement PCIe Gen4 to connect with non-AMD host processors or other devices, such as high-speed Ethernet cards. Owing to their high power, the MI250 products are available only in the OAM form factor.
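HBM bandwidth is the product of the number of stacks (the memory channels described above), the interface width per stack, and the per-pin data rate. The sketch below assumes the standard 1,024-bit interface per HBM stack and per-pin rates of 2.4Gb/s for the MI100’s HBM2 and 3.2Gb/s for the MI250X’s HBM2e; those rates are our assumptions from public spec sheets rather than figures in this profile.

```python
# Sketch: HBM bandwidth = stacks x bits per stack x Gb/s per pin / 8 bits per byte.
# Assumed: 1,024-bit interface per stack and the per-pin rates below.

BITS_PER_STACK = 1024

def hbm_bandwidth_tbps(stacks: int, gbps_per_pin: float, bits_per_stack: int = BITS_PER_STACK) -> float:
    """Aggregate bandwidth in TB/s (decimal units)."""
    gigabytes_per_s = stacks * bits_per_stack * gbps_per_pin / 8
    return gigabytes_per_s / 1000

mi100_bw = hbm_bandwidth_tbps(stacks=4, gbps_per_pin=2.4)   # HBM2, assumed 2.4 Gb/s
mi250x_bw = hbm_bandwidth_tbps(stacks=8, gbps_per_pin=3.2)  # HBM2e, assumed 3.2 Gb/s

print(f"MI100:  {mi100_bw:.2f} TB/s")
print(f"MI250X: {mi250x_bw:.2f} TB/s ({mi250x_bw / mi100_bw:.1f}x)")
```

Doubling the stack count accounts for a 2x gain and the faster pins for the remaining 1.33x, giving about 3.28TB/s, which rounds to the 3.3TB/s quoted.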

CDNA3 and MI300 products

The next-generation CDNA3 architecture, used in the MI300, doubles INT8 performance per core simply by performing two 8-bit operations instead of one 16-bit operation. The CDNA3 architecture also gives the CPU and GPU a unified, shared physical memory. The new architecture enhances the compute unit to support a range of data formats for AI acceleration, including FP8, integer, brain float (BF16), and tensor float (TF32).

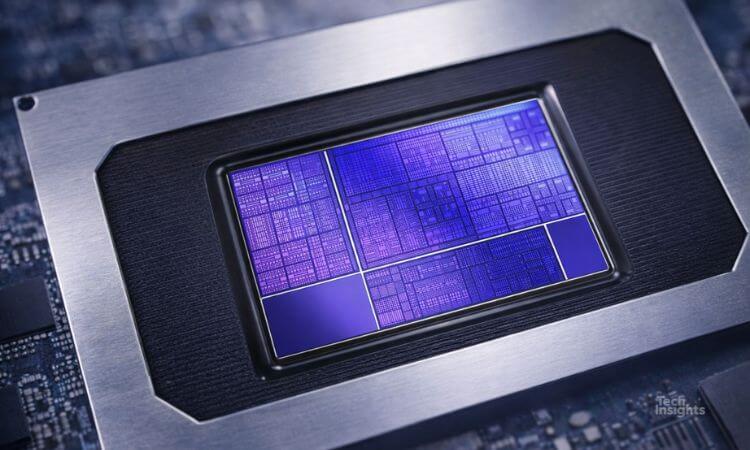

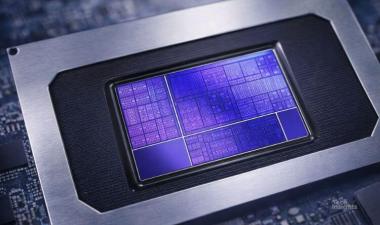

The MI300X is an enhanced commercial version of the MI300 that was built for the El Capitan supercomputer. The accelerator is based on innovative 3D packaging developed by AMD and offers up to 2.6 PetaOPS of FP8 performance at a 750W TDP. The accelerator is already in production with AMD’s lead customers, which include Meta and Microsoft.
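The 2.6 PetaOPS FP8 figure can be reconstructed from core count, per-core operations, and clock, and the result also illustrates the CDNA3 point that 8-bit operations run at twice the 16-bit rate per core. This sketch assumes 38 active compute units per XCD (304 in total), a 2.1GHz peak clock, and 2,048 dense FP16 matrix operations per core per cycle; none of these numbers appear in the profile.

```python
# Sketch of the MI300X peak FP8 arithmetic. Assumed values, not from the text:
# 38 active CUs per XCD, 2.1 GHz peak clock, 2,048 FP16 matrix ops per CU per cycle.

XCDS = 8                  # from the text ("up to 8" XCDs)
ACTIVE_CUS_PER_XCD = 38   # assumed
CLOCK_GHZ = 2.1           # assumed
FP16_OPS_PER_CU = 2048    # assumed dense FP16 matrix ops per CU per cycle
FP8_OPS_PER_CU = 2 * FP16_OPS_PER_CU  # two 8-bit ops in place of one 16-bit op
TDP_WATTS = 750           # from the text

compute_units = XCDS * ACTIVE_CUS_PER_XCD
# CUs x ops x GHz gives Gop/s; dividing by 1e6 converts to Pop/s.
fp16_pflops = compute_units * FP16_OPS_PER_CU * CLOCK_GHZ / 1e6
fp8_pflops = compute_units * FP8_OPS_PER_CU * CLOCK_GHZ / 1e6

print(f"{compute_units} CUs: FP16 {fp16_pflops:.2f} PF, FP8 {fp8_pflops:.2f} PF")
print(f"FP8 efficiency: {fp8_pflops * 1000 / TDP_WATTS:.2f} TOPS/W")
```

Under these assumptions FP8 reaches about 2.61 PetaOPS, exactly twice the FP16 rate, or roughly 3.5 TOPS per watt at the 750W TDP.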

The MI300X integrates up to 8 accelerator complex dies (XCDs), vertically stacked on 4 I/O dies (IODs) that contain the system infrastructure. The dies are connected using AMD Infinity Fabric. Through a silicon interposer, the IODs connect to 8 high-bandwidth memory (HBM) stacks of 24GB each, for a total of 192GB. The accelerator comes with a 16-lane PCIe 5.0 bus for system connectivity.
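The memory figures follow directly from the stack count. Capacity uses only the eight 24GB HBM stacks described in the text; the bandwidth line additionally assumes a 1,024-bit interface per HBM3 stack running at 5.2Gb/s per pin, which is our assumption rather than a figure from this profile.

```python
# Sketch: MI300X memory capacity and bandwidth from the HBM stack count.
# From the text: 8 stacks x 24 GB. Assumed: 1,024-bit interface, 5.2 Gb/s per pin.

HBM_STACKS = 8
GB_PER_STACK = 24
BITS_PER_STACK = 1024   # assumed standard HBM interface width
GBPS_PER_PIN = 5.2      # assumed HBM3 data rate

capacity_gb = HBM_STACKS * GB_PER_STACK
bandwidth_tbps = HBM_STACKS * BITS_PER_STACK * GBPS_PER_PIN / 8 / 1000

print(f"Capacity:  {capacity_gb} GB")
print(f"Bandwidth: {bandwidth_tbps:.2f} TB/s")
```

Under these assumptions the package offers 192GB at about 5.3TB/s.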

AMD MI300X vs Nvidia H100

The MI300X specs stack up ahead of Nvidia’s H100, beating it in every compute row. However, AMD is at least a year behind in the market, as the H100 has been shipping since 1Q23. Nvidia has already announced its next-generation chip, the H200, and we expect it to deliver better performance.
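The spec comparison can be tabulated in a few lines of Python. The figures below are dense (non-sparse) peak values as we understand them from the two vendors’ public datasheets, using the H100 SXM5 variant; treat them as assumptions to verify, not as data from this profile.

```python
# Sketch: MI300X vs H100 SXM5 spec ratios.
# Assumed dense peak figures from public vendor datasheets (verify before reuse).

SPECS = {
    # row: (MI300X, H100 SXM5, unit)
    "FP64 vector": (81.7, 34.0, "Tflop/s"),
    "FP16 matrix": (1307.4, 989.4, "Tflop/s"),
    "FP8 matrix": (2614.9, 1978.9, "Tflop/s"),
    "HBM capacity": (192.0, 80.0, "GB"),
    "HBM bandwidth": (5.3, 3.35, "TB/s"),
    "TDP": (750.0, 700.0, "W"),
}
COMPUTE_ROWS = ("FP64 vector", "FP16 matrix", "FP8 matrix")

def ratios(specs: dict) -> dict:
    """MI300X value divided by H100 value for each row."""
    return {row: mi300x / h100 for row, (mi300x, h100, _unit) in specs.items()}

spec_ratios = ratios(SPECS)
for row, (mi300x, h100, unit) in SPECS.items():
    print(f"{row:<14} MI300X {mi300x:>7} {unit:<8} H100 {h100:>7} {unit:<8} {spec_ratios[row]:.2f}x")
```

The TDP row is kept alongside the compute rows to show that the MI300X’s advantage comes with about 50W more board power.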

AMD’s MI300X is available in the OCP Accelerator Module (OAM) form factor, possibly because of its high power consumption and the requirements of its lead customer, Facebook (Meta). When a PCIe version is released, it could become a drop-in replacement for the H100. However, we expect it to have lower performance owing to the limited power available through PCIe slots.

Nvidia has a significant software lead, however, with optimized low-level software libraries and a comprehensive suite to support cloud operations. While Nvidia offers a closed ecosystem, AMD has taken an open-source approach: its ROCm software suite is open source and can be customized by customers and partners. ROCm has had limited customer traction but offers a large collection of low-level math libraries inherited from AMD’s historical GPU software stack.

Efficient support for large GPU clusters is critical for training, and there is little information on how well the MI300X will scale in clusters. No third-party benchmarks are available for the MI300X, but AMD says that its customers, including Meta and Microsoft, found the performance satisfactory and are therefore purchasing it in large volumes. AMD expects its data center GPU revenue to be approximately $400 million in the fourth quarter of 2023 and to exceed $2 billion in 2024.
